RACares: a conceptual design to guide mHealth relational agent development based on a systematic review

Highlight box

Key findings

• We introduce a conceptual design for mHealth relational agents (RAs) focusing on user engagement, personalized interventions, data monitoring, and multimodal accessibility.

What is known and what is new?

• An RA is a digital tool designed to establish a social and emotional connection with users, specifically addressing their health and well-being concerns.

• When integrated into mobile devices, these RAs can act as personal health assistants and their easy accessibility can transform healthcare delivery, especially for individuals in remote areas.

What is the implication, and what should change now?

• The conceptual design has a wide range of applications in mHealth, such as patient education, mental health counseling, and chronic disease management.

• This design holds significance in digital health research, offering a blueprint for the creation of more effective RAs that can potentially revolutionize healthcare delivery and support.

Introduction

Relational agents (RAs) are software-based virtual agents designed to interact with humans in a natural, human-like manner, with the goal of establishing and maintaining a relationship with the user (1,2). These agents are typically implemented as mobile applications that run on a range of mobile devices, including smartphones and tablet computers. Mobile health (mHealth)-based RAs are paving the way for a new era of personalized healthcare. Their capabilities stretch beyond mere data collection, offering users a more interactive and empathetic health journey. From daily health tips and medication reminders to emotional support during challenging health episodes, these RAs act as constant companions. Their adaptability allows them to cater to a wide spectrum of health needs, from mental well-being support to chronic disease management. As technology and healthcare converge further, the potential for mHealth-based RAs is vast, signifying a move towards more holistic, user-centric healthcare solutions.

Compared to other health applications, RAs strive to build a user’s trust in the system by showing empathy and providing multi-modal, easy-to-use communication channels. In particular, they use natural language to communicate with users, provide support, and personalize interactions to meet individual needs (3). RAs have been used in a variety of settings, including healthcare (4), and education (5), where they have shown the potential to improve user outcomes through ongoing support, education, and motivation.

The evolution of mHealth has brought a heartening change to the tapestry of healthcare services. In a world often dominated by clinical settings and sterile interactions, the newest mHealth innovations usher in a wave of warmth, understanding, and personalized care. RAs integrated into mHealth solutions, the stars of this revolution, act as more than just digital interfaces; they are akin to compassionate companions that stand by patients’ side, understand their needs, and offer timely advice. What is truly remarkable is their ability to bring healthcare right to our doorsteps, so that medical advice for non-life-threatening conditions (e.g., typical flu or headaches) no longer has to be confined to hospital corridors or clinic waiting rooms. With these agents, expert guidance is but a touch away, wrapped in a cloak of empathy and care. This is not just technology advancing; it is healthcare becoming more human, more accessible, and more tailored to each of us.

In healthcare contexts, RAs have been used to address a wide range of issues, including chronic disease management, mental health, and rehabilitation, e.g., the RAs presented in (6-9). They have shown promise in improving patient outcomes, enhancing patient engagement, and reducing healthcare costs. One of the key benefits of using RAs for healthcare purposes is their ability to provide personalized support to patients. Through ongoing interactions, these agents can learn about users’ preferences, behaviors, and needs, and tailor their responses accordingly (10,11). This level of customization has the potential to improve treatment adherence and patient satisfaction. RAs can also provide support in a variety of formats, including text-based messaging, voice assistants, and virtual reality environments. This flexibility makes them well-suited to meet the needs of diverse patient populations and healthcare settings.

It is evident that RAs, especially when applied to healthcare delivery or related health services, have garnered significant attention due to their potential to transform patient care. However, as with any intervention, there are potential negative or unanticipated outcomes to consider. For example, some patients might over-rely on the RAs, neglecting to consult human healthcare professionals (HCPs) when necessary (12). This can be dangerous if the RA is not equipped to handle complex medical queries or emergencies. Another negative outcome is miscommunication: RAs can sometimes misinterpret user input, leading to inappropriate responses (13). Moreover, there is a risk of patients misunderstanding the guidance given by RAs (14). In the context of healthcare, such misunderstandings can have dire consequences. With regard to empathy, although RAs can simulate it, they do not “feel” in the human sense (15). There may be scenarios where a genuine human touch, understanding, or empathy is crucial, and an RA might fall short. On the other hand, if patients become too attached to or emotionally reliant on RAs, it could interfere with their real-world social interactions or deter them from seeking human help when needed (15,16). Overall, these potential issues underscore the importance of designing RAs in healthcare with careful consideration for safety, efficacy, and cultural relevance. It is also vital to ensure that users are educated about the strengths and limitations of such systems, so that they are used appropriately as supplements to, rather than replacements for, human HCPs.

While RAs are closely related to conversational agents (CAs), there are some important differences between the two that justify studying them separately (17,18). RAs are specifically designed to build and maintain long-term relationships with users, whereas CAs are typically designed for more specific tasks or interactions. RAs often incorporate features such as personalization, emotion recognition, and adaptive behavior that are not commonly found in CAs. Despite their potential to improve patient outcomes, RAs have received relatively little attention in the healthcare context. In a 2009 manuscript, “Relational Agents: A Critical Review”, Campbell et al. (14) examined the use of RAs in healthcare and education. The review highlighted that while RAs are a natural and intuitive way to improve patient care and educational outcomes, very few currently exist and research in this area is sparse. In a more recent review, “Human-like communication in conversational agents: a literature review and research agenda”, Van Pinxteren et al. (19) investigated the effects of communicative behaviors used by CAs (such as chatbots, avatars, and robots) on relational outcomes (equivalent to studying CAs’ ability to act like RAs). The authors classified all the behaviors, tested across the selected 61 studies, into two major categories: modality (verbal, nonverbal, appearance) and footing (similarity, responsiveness).

Based on the critical reviews discussed above, it can be inferred that RAs are still a new and evolving healthcare technology. While preliminary evidence suggests that they have the potential to improve patient outcomes, limited guidance is available regarding their development. In this manuscript, we propose a conceptual design, ‘RACares’, that provides a structured approach to designing effective and user-friendly mHealth-based RAs while encouraging experimentation and innovation. An effective and user-friendly RA is able to understand and interpret human language and provide appropriate responses. It is able to recognize and respond appropriately to users’ emotions, including showing empathy and using an appropriate tone of voice. A fast and accurate response to user requests or questions is also a key feature, minimizing the user’s wait time. Personalization is also important, with the RA being able to tailor its responses to the user’s preferences and needs, such as by recognizing their name or remembering previous interactions. Additionally, the RA should provide accurate information consistently, avoiding errors or misunderstandings. Our aim is to facilitate the exploration of new designs and approaches to developing RAs that can meet the diverse needs of healthcare users. To the best of our knowledge, ‘RACares’ represents the first attempt to provide a comprehensive guide for developing RAs in healthcare contexts. By offering this guidance to designers, developers, and researchers, we hope to support the ongoing evolution of this technology and its integration into the healthcare industry to improve patient outcomes. Furthermore, we hope that it will be useful while analyzing and interpreting the existing RA designs. We present this article in accordance with the PRISMA reporting checklist (available at https://mhealth.amegroups.com/article/view/10.21037/mhealth-23-46/rc).

Methods

The objective of this research was to create a conceptual design that enables the exploration and advancement of innovative mHealth-based RA designs specifically tailored to provide healthcare. We used a systematic literature review followed by qualitative analysis to develop the conceptual design.

Systematic literature review

The purpose of the systematic literature review was to collect data and analyze the existing research on RAs. Below we describe our methodology in detail. This review was not registered in any registry and no review protocol was prepared.

Search strategy

Between May and September 2022, we conducted an exhaustive electronic search for relevant articles by using the keywords “relational agent”, “virtual agent”, and “conversational agent” in IEEE Xplore Digital Library, ACM Digital Library, PubMed, EBSCO, and Web of Science Core Collection. These databases were chosen because they mainly include healthcare and technology-related publication venues and have been previously used in systematic literature reviews covering comparable topics (14,19-22).

Study selection criteria

To qualify for the review, articles had to meet the following criteria:

- Articles that described the use of RAs in healthcare contexts.

- Experiments, evaluations, pilot studies, or randomized controlled trials (RCTs) that included a detailed design of the RA.

- Articles that assessed the design or outcomes of RAs post user’s interactions with the respective agents.

- Peer-reviewed journal publications, book chapters, or refereed conference papers published in English.

Abstracts, theses/dissertations, and papers published in languages other than English were excluded. Publications targeting chatbots, and studies that did not include interactions between an RA and a human user (i.e., conceptual video prototypes, or non-interactive simulation) were also excluded. The searches were limited to titles and abstracts, and the language was limited to English. Additionally, upon the availability of such filtering options, we restricted the searches to the document types of Conference Paper, Article, Book Chapter, and Book.

Screening, data extraction, and synthesis

All articles discovered by the searches were imported into a reference management tool, Zotero. Two researchers first screened the entire corpus by reading titles and abstracts to remove all the duplicates. After comparing and finalizing the remaining article list, each researcher independently screened the articles by first reading the article titles, then the abstracts, and lastly the full text in light of the inclusion and exclusion criteria specified above. After each of these phases, Cohen’s kappa (23) was computed to assess the inter-reviewer reliability and level of agreement. Any differences in opinion were discussed and resolved by consensus.
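As an illustration of the agreement statistic used at each screening phase, the following is a minimal sketch of how Cohen’s kappa can be computed for two reviewers’ include/exclude decisions. The function name and the example decisions are hypothetical and are not data from this review.

```python
from collections import Counter

def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical labels over the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over labels of the product of marginal proportions.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if p_e == 1.0:
        # Both raters used one identical label throughout; treated here as full agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)

# Hypothetical screening decisions (illustrative only).
reviewer_1 = ["include", "include", "exclude", "exclude", "include", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "include", "exclude"]
print(round(cohen_kappa(reviewer_1, reviewer_2), 3))  # prints 0.667
```

In this example the reviewers agree on five of six articles (observed agreement 0.833) while chance agreement is 0.5, giving a kappa of about 0.667.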

The final article list was independently reviewed by two researchers who became familiar with the articles by reading and re-reading them. Data from each article were then extracted into an Excel spreadsheet under the following topics: general information about the included studies, type and brief description of the characteristics of the RAs, targeted healthcare contexts in the studies, design elements of the studied RAs, RA assessment metrics, primary reported results and findings, type of study participants, and any specific recommendations about system development of future RAs. When data extraction was completed, the researchers met to compare their results; any discrepancies were examined and resolved by consensus.

Qualitative analysis

The extracted data were analyzed in two separate rounds. In the first round, we applied a generalized inductive approach to generate a feature taxonomy. The purpose of the second round was to develop a conceptual design based on the feature taxonomy.

During the first round, two researchers read and re-read the collected data on the existing studies to develop codes using an open coding approach. The codes were then assigned to the collected data, and any disagreements were resolved through discussion with a third researcher. Afterwards, codes with similar content were merged and grouped to form sub-themes. Subsequently, the sub-themes were clearly defined and categorized within the main themes. The themes became the top-level categories (features) in our taxonomy and the codes became the lowest-level values (of features). A narrative synthesis of the reconciled data was then used to develop each theme’s description.

During the second round, we utilized a generalized deductive approach to analyze the data. Building on the inductive analysis and feature taxonomy developed during the first round, we conceptualized a set of themes (stages) for the conceptual design. We then re-organized the data into categories and subcategories and assigned them to the identified themes. Afterwards, we refined the conceptual design by defining relationships between the stages and the modules or controllers where required.

Results

Feature taxonomy

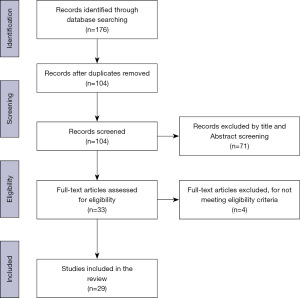

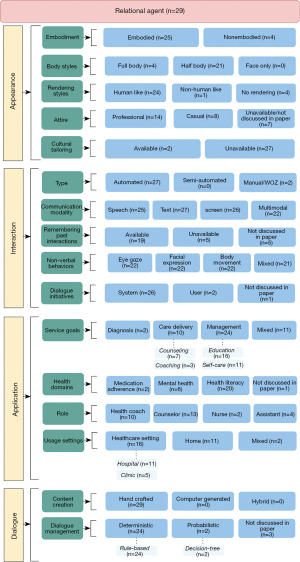

Figure 1 presents the PRISMA flow diagram of our method, which led to the identification of 29 articles: (1), (3), (6), (7), (11), and (24-47). These articles were then used to develop the feature taxonomy. Four major feature categories, i.e., appearance, interaction, application, and dialogue, were identified through our analysis and used to create the taxonomy shown in Figure 2. Below, we present a description of each feature.

Appearance

This category covers the visual aspects of the RA, i.e., its graphical appearance, including shape, form, features, and style. Five characteristics of appearance were identified: embodiment, body styles, rendering styles, clothing styles, and cultural tailoring.

RAs can be broadly categorized into two types: embodied and non-embodied agents. Embodiment refers to the design of a computational agent to mimic human physical characteristics and behaviors, such as facial expressions, gestures, and body movements (1,11,47). Embodied agents can also adapt their behavior to different situations and contexts, making them particularly useful in applications where social interaction and physical presence are important, such as healthcare or education. Incorporating these human-like qualities into an RA can create a more engaging and effective interaction between the user and the agent (2). Non-embodied RAs, on the other hand, are agents that do not have a physical form or body. These agents can be implemented as software programs or applications that run on a range of devices, such as mobile phones or desktop computers. Non-embodied agents rely on text or voice-based communication to interact with the user, and they do not provide non-verbal cues or gestures. They are typically used for applications such as customer service or information retrieval, where social presence and physical interaction are less important. The key difference between embodied and non-embodied agents is their physical presence and ability to provide non-verbal cues and gestures. Embodied agents are more human-like in their interaction and can establish a stronger relationship with the user, while non-embodied agents are more focused on information processing and task completion.

In terms of style, some RAs have a human-like appearance, such as a 2D avatar with realistic features, while others are more abstract and stylized. A more human-like body style may create a greater sense of familiarity and comfort, while a more abstract style may be better suited to applications where a stylized appearance is desired (8,11,47). An additional aspect of body style was the presence of movement and animation.

Twenty-five out of 29 RAs were described as having a rendered appearance, either human-like (24 RAs) or non-human (1 RA). Twenty-one RAs had half-body visualization, compared to 4 that had full-body visualization. In keeping with their roles, 14 RAs wore professional attire, whilst 8 RAs were dressed in casual clothing. Twenty-seven RAs were not specifically tailored to any culture or user community, whereas 2 RAs were.

Interaction

This category describes how the exchange of information took place between users and RAs. It includes five sub-categories: interaction type, interaction modality, remembering previous interactions, non-verbal behaviors, and dialogue initiation.

To build effective relationships with users by providing personalized, adaptable, and long-term interactions, the reviewed RAs used the following techniques:

- The RA moved or walked onto the screen and greeted the user (n=14).

- The RA conducted social and empathic chat (i.e., the RA actively engaged with the user, asking questions and showing interest in their thoughts and feelings) at each interaction with the user (n=8).

- The RA assessed the user’s behavior and feedback since the last interaction with the user (n=13).

- The RA provided tips, guidelines, or relevant educational material to the user, e.g., managing side effects of the medicine if the RA dealt with medication adherence, coping with mental health issues if the RA worked on the mental well-being of the user, etc. (n=20).

- The RA set new behavioral goals for the user to work towards before the next interaction (n=14).

- The RA exchanged a farewell with the user, after which it walked off or disappeared from the screen (n=12).

Five RAs were unable to recall previous interactions with users, while 19 RAs were able to do so. Voice was used by 25 RAs to interact with users, while 27 RAs used text. Additionally, 26 RAs displayed multiple-choice options or pre-defined utterances on displays, and 22 RAs made use of multimodal mechanisms.

In terms of non-verbal behaviors, 22 RAs delivered non-verbal communication by using eye gaze, 22 RAs used facial expressions, 22 RAs used body movements, and 21 RAs used a combination of multiple non-verbal cues. Dialogue was mostly initiated by the RA: 26 RAs started the conversation, while 2 RAs responded to user inputs.

Application

This category describes the usage of the RA, i.e., for what and where the RA was designed to function. It is divided into four sub-categories: RA’s services, targeted health domains, RA’s roles, and context of use. Among the reviewed RAs, diagnosis and screening of health issues were based on question-and-answer exchanges between the RAs and the users, while coaching, counseling, and self-care management were offered based on existing literature and therapy models, e.g., cognitive behavioral therapy (CBT).

Out of the 29 RAs, 2 were designed for diagnosis and screening, 10 for providing care through coaching and counseling, 24 for education and self-care management, and 11 had mixed goals. Among the RAs, 10 acted as health coaches, 13 as counselors, 2 as nurses, and 4 as assistants. Additionally, 16 RAs were designed for healthcare settings, 11 for home use, and 2 for both locations.

Regarding the health domains, 20 RAs focused on health literacy, while only 2 and 6 RAs addressed mental health and medication adherence issues, respectively.

Dialogue

This category is concerned with how the RAs’ dialogues were created and managed by the developers. It has two major aspects: content creation and dialogue management. The dialogue was manually created by designers with the assistance of domain experts and existing theories for specific healthcare contexts. Among the 29 RAs, 24 utilized a deterministic, rule-based strategy to manage conversations, while 2 used a probabilistic decision tree approach.

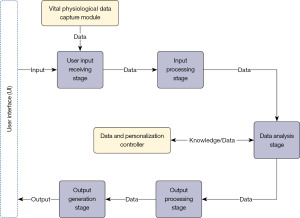

Proposed conceptual design

In the previous section, we introduced four key features that we need to consider while designing RAs. In this section, we propose a conceptual design (Figure 3) to support common behaviors and design decisions that have been used for health-related RAs.

The conceptual design has five independent stages with one supporting module and one data controller. A stage can be defined as a logical grouping of related functionalities (components) to achieve specific goal(s). Stages take data as input from other input sources or stages. The outputs of each stage become inputs for subsequent stages. A module or controller provides supporting functionalities where required. To provide multimodal capabilities, the first (input) and last (output) stages interface with a variety of inputs and outputs, such as text, voice, and data.

The logical organization of the stages in the conceptual design can be described as input > process > analyze > output. Below, we describe each entity within the conceptual design in detail.
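The chaining of stages described above can be sketched as a list of functions in which each stage’s output becomes the next stage’s input. This is only an illustrative sketch: all function names, dictionary keys, and placeholder stage bodies are hypothetical, and the output processing and output generation stages are collapsed into a single function for brevity.

```python
from typing import Callable, Dict, List

Stage = Callable[[Dict], Dict]

def receive_input(data: Dict) -> Dict:
    # Input stage: wrap the raw user event in a common envelope.
    return {"modality": data.get("modality", "text"), "raw": data.get("raw", "")}

def process_input(data: Dict) -> Dict:
    # Processing stage: normalize the raw content into plain lowercase text.
    return {**data, "text": str(data["raw"]).strip().lower()}

def analyze(data: Dict) -> Dict:
    # Analysis stage: placeholder analysis that only flags a greeting.
    return {**data, "is_greeting": data["text"] in {"hi", "hello"}}

def generate_output(data: Dict) -> Dict:
    # Output stage: choose a response based on the analysis result.
    if data["is_greeting"]:
        reply = "Hello! How are you feeling today?"
    else:
        reply = "Could you tell me more?"
    return {**data, "reply": reply}

def run_pipeline(event: Dict, stages: List[Stage]) -> Dict:
    # Each stage's output becomes the next stage's input.
    for stage in stages:
        event = stage(event)
    return event

STAGES = [receive_input, process_input, analyze, generate_output]
result = run_pipeline({"modality": "text", "raw": "  Hello "}, STAGES)
print(result["reply"])  # prints: Hello! How are you feeling today?
```

Keeping each stage behind the same input/output shape means a stage can be replaced (for example, swapping in a different analysis method) without changing the others.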

User input receiving stage

This stage accommodates all input types that can be obtained from the user via the user interface (UI). The input modalities depend on the RA’s appearance and the user’s devices, e.g., smartphones, tablet computers, and other mHealth devices. Input can take one or more of the following forms: text, audio, clicks (on pre-defined utterance options on the screen), the user’s gesture data, the device’s accelerometer or sensor data, and the user’s eye gaze data. The stage receives these data, transforms them into a format that other stages and modules can use, and sends the results to the Input Processing Stage.

The Vital Physiological Data Capture Module is connected to this stage and can record essential physiological measurements, including blood pressure, body temperature, heart rate, and blood oxygen saturation. Typically, this module can be integrated into the UI through smartwatches or fitness bands connected to the mHealth devices. The purpose of this module is to help the RAs check their users’ vital health conditions. Daily activity data (e.g., step counts) recorded by smartwatches or fitness bands can also help the RAs provide better-tailored coaching or recommendations to the users.

Research shows that different kinds of end-users prefer different kinds of input modalities. For example, low literacy users prefer icons or voice-activated UIs (48-50). Therefore, depending on the target population’s characteristics, various input modalities can be tested and incorporated within the RA.
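One way to picture the common format that this stage hands to the Input Processing Stage is a small envelope that records the modality alongside the payload. The sketch below is an assumption for illustration only; the class name, field names, and modality labels are hypothetical and are not prescribed by the conceptual design.

```python
from dataclasses import dataclass, field
from typing import Any, Dict

# Hypothetical labels for the input forms listed in this section.
SUPPORTED_MODALITIES = {"text", "audio", "click", "gesture", "sensor", "eye_gaze"}

@dataclass
class InputEvent:
    """Common envelope passed from the input receiving stage to later stages."""
    user_id: str
    modality: str
    payload: Any
    metadata: Dict[str, Any] = field(default_factory=dict)

def to_input_event(user_id: str, modality: str, payload: Any, **metadata: Any) -> InputEvent:
    # Reject modalities the RA has not been designed to handle.
    if modality not in SUPPORTED_MODALITIES:
        raise ValueError(f"unsupported modality: {modality}")
    return InputEvent(user_id=user_id, modality=modality, payload=payload, metadata=dict(metadata))

# A tap on a pre-defined utterance option, wrapped in the common envelope.
event = to_input_event("user-001", "click", "option_2", screen="daily_check_in")
print(event.modality, event.payload)
```

Because the set of supported modalities is explicit, modalities suited to a given target population can be enabled or disabled in one place.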

Input processing stage

The input processing stage converts user input into formats that the system (RA) can recognize. In this conceptual design, it is kept separate from the Data Analysis Stage so that the analysis stage always receives processed, compatible data that are suitable for analysis, regardless of the user input type or device type.

This stage needs to be designed to process multimodal data coming from users so that the RA can understand them. For example, this stage should have a component to transform spoken words or audio signals into textual content, which is frequently used as a command for the RA. The transformed textual content from the user can be used for various analyses, including sentiment analysis and emotion analysis.

Data and personalization controller

The Data and Personalization Controller is a collection of a knowledge base (KB), a database (DB), and a mechanism for human intervention. This controller generally resides on a central server and the RAs can access it remotely. It is responsible for providing necessary data and commands to the data analysis stage, and it also receives and stores data from that stage. All the data processed and stored by this controller are secured and encrypted so that only the RA and eligible personnel can access them. In particular, in cases where user or patient identification is not required, this controller stores data anonymously to preserve users’ privacy.

An online, machine-readable, self-serve centralized repository of knowledge on a particular service, function, or subject is called a KB (51). In this conceptual design, the KB inside this controller is used to store information and knowledge regarding care guidelines, preventative measures, medication advice, coaching and counseling content, etc. When there is an update for a particular topic or piece of content, the KB can be updated. For example, when a new guideline for COVID-19 vaccination or any other health issue becomes available, the KB is updated with the newer version so that the RAs can deliver up-to-date information to the users or patients.

Besides a KB, the Data and Personalization Controller uses a database for storing and retrieving dynamic information and data from the RAs. So that previous reaction states can be referred to when determining the next reaction states, the Reaction Controller feeds its processed reaction instructions as data to this database. A scheduler is needed to update data for the Reaction Controller. The data updating must be continuous, and the scheduling must be consecutive and error-free. The scheduling should be either time interval-based or event-based but may vary due to the varied development processes and programming approaches used for different functionalities of RAs. The designers can set a design requirement, which the developers can follow, to fix the scheduling time interval in the scheduler. The database can also be updated and modified with necessary data by the responsible authority, e.g., hospital administration. This database helps the overall RA system to obtain the necessary data for performing and generating outputs. The synced data should be anonymous to safeguard the security and privacy of users’ data but may be synchronized with minimal identifying information depending on the usage contexts or the agreement reached by the RA system administrators and users. For example, when the RA is provided by a hospital authority to its patients for homecare, the hospital personnel need to check the patient’s health records and, in that case, the data need to be synchronized with patients’ identification information. However, the applicable data protection policy must be followed while transmitting sensitive data.
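The two scheduling options mentioned above, time interval-based and event-based, can be contrasted in a short sketch. The class and method names are hypothetical, timestamps are passed in explicitly so the behavior is easy to follow, and the choice to restart the interval after an event-driven update is an assumption rather than a requirement of the conceptual design.

```python
from typing import Callable, List, Optional

class UpdateScheduler:
    """Triggers a data update either on a fixed interval or on an explicit event."""

    def __init__(self, interval_seconds: float, on_update: Callable[[str], None]):
        self.interval_seconds = interval_seconds
        self.on_update = on_update
        self._last_run: Optional[float] = None

    def tick(self, now: float) -> bool:
        # Time interval-based trigger: update only if the interval has elapsed.
        if self._last_run is None or now - self._last_run >= self.interval_seconds:
            self._last_run = now
            self.on_update("interval")
            return True
        return False

    def notify(self, event_name: str, now: float) -> None:
        # Event-based trigger: update immediately and restart the interval (assumption).
        self._last_run = now
        self.on_update(event_name)

log: List[str] = []
scheduler = UpdateScheduler(interval_seconds=60, on_update=log.append)
scheduler.tick(now=0)                        # first tick always updates
scheduler.tick(now=30)                       # too early, no update
scheduler.notify("new_reaction", now=45)     # event forces an update
scheduler.tick(now=100)                      # only 55 s since the event, no update
scheduler.tick(now=105)                      # 60 s since the event, updates
print(log)  # prints ['interval', 'new_reaction', 'interval']
```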

The Data and Personalization Controller also provides a human-intervention channel for requesting instruction from a domain expert serving the healthcare context or from a peer when appropriate, taking into account the need for peer intervention identified in the findings of the user studies in this research. This controller processes and converts those instructions into system-compatible data streams or commands so that the RA can deliver them to the users through output generation.

Most importantly, this controller helps the RA system adapt the information to a specific user in accordance with that user’s interests, preferences, and capacities. The RA’s output can be configured through this controller in order to match and support the user’s preferences.

Data analysis stage

User inputs that have previously been handled by the input processing stage are analyzed by the data analysis stage. Several types of data, such as voice, facial expression, gesture, motion, physiological vitals, and eye gaze, must first be processed in order to be compatible with this stage. This stage analyzes the processed inputs with the aid of the Data and Personalization Controller, since the controller has access to secured databases and a knowledge base of user data and health-related knowledge. This stage includes different analyzing components for user intent detection, sentiment analysis, emotion analysis, discussion topic analysis, and health data analysis. The output of this stage is transferred to the next stage (output processing stage) and can be stored in the database for refining future interactions.

The intent detection component analyzes the user inputs to recognize the user’s intent, i.e., what the user wants to do or what the RA is to accomplish. Every goal-oriented conversational technology requires intent detection to produce effective responses based on user inputs. To interpret the user’s intended objective, the conversational system must use its intent detector to categorize the user’s voice or textual utterance into one of many predetermined categories or intents (52). The RA can choose which domain or event it needs to work on based on the intent categories.
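As a rough illustration of categorizing an utterance into one of several predetermined intents, the sketch below uses simple keyword overlap. This is a deliberately simplified stand-in: the intent names and keyword sets are hypothetical, and a deployed RA would more likely rely on a trained classifier.

```python
import re

# Hypothetical intents and keywords, for illustration only.
INTENT_KEYWORDS = {
    "medication_reminder": {"medication", "medicine", "pill", "dose"},
    "symptom_report": {"pain", "headache", "fever", "cough"},
    "greeting": {"hello", "hi", "hey"},
}

def detect_intent(utterance: str, fallback: str = "unknown") -> str:
    """Return the intent whose keyword set overlaps most with the utterance."""
    tokens = set(re.findall(r"[a-z']+", utterance.lower()))
    best_intent, best_score = fallback, 0
    for intent, keywords in INTENT_KEYWORDS.items():
        score = len(tokens & keywords)
        # Strict comparison: on a tie, the intent listed first is kept.
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent

print(detect_intent("I forgot to take my pill this morning"))  # medication_reminder
print(detect_intent("I have a headache and a fever"))          # symptom_report
print(detect_intent("What is the weather like?"))              # unknown
```

Returning an explicit fallback intent gives the RA a defined path (for example, asking a clarifying question) when no category matches.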

The sentiment analyzing component examines the emotions that are communicated in a text. By employing a sentiment analysis technique, a conversational system can detect whether a given input expresses feelings that are neutral, positive, or negative. The purpose is to automatically identify and classify the sentiments stated in the text in order to estimate and understand the overall sentiment of the user input (53). The sentiment analysis component in this stage enables the RA to comprehend the user’s sentiment throughout the interaction and apply the necessary methods to provide responses that will boost the user’s mood or health.
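The three-way classification into neutral, positive, or negative can be illustrated with a very small lexicon-based sketch. The word lists are hypothetical and far too small for real use, and the approach ignores negation (e.g., “not good”); it is meant only to show the shape of the component’s input and output.

```python
import re

# Tiny hypothetical lexicons, for illustration only.
POSITIVE = {"good", "great", "better", "happy", "relieved"}
NEGATIVE = {"bad", "worse", "sad", "tired", "anxious", "pain"}

def classify_sentiment(text: str) -> str:
    """Label text as positive, negative, or neutral by counting lexicon hits."""
    tokens = re.findall(r"[a-z']+", text.lower())
    # Each positive word adds 1 and each negative word subtracts 1.
    score = sum((t in POSITIVE) - (t in NEGATIVE) for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(classify_sentiment("I feel much better today"))  # positive
print(classify_sentiment("I am tired and anxious"))    # negative
print(classify_sentiment("I took my medication"))      # neutral
```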

Facial expressions are among the best markers of human moods and emotions, and facial expression is the most important strategy for social communication and interpersonal interaction. It works as an expressive portrayal of a person’s emotional and mental states (54). To be effective in generating cognitively sensitive conversational responses, the RA must first assess the user’s emotional state and personality type, and then react in a manner that brings empathy and naturalness to the interactions (55). Provided that the user’s end device is able to collect and transmit facial expression data in real time throughout the interaction with the RA, this stage may use an emotion analyzing component to identify the user’s emotional state.

To identify the topic that the user wishes to discuss, the topic analyzing component analyzes the data provided by the user. Based on the identified topic, this component helps the analysis stage comprehend the user’s interests and intended goals for engagement with the RA.

The health data analyzing component evaluates only the user’s vital physiological data. Using pre-defined thresholds specified by HCPs or specialists in the RA’s target health service, this component determines whether the user’s vital signs are within acceptable ranges or are a cause for concern. This feature specifically helps the RA determine whether the user needs immediate attention and emergency assistance from HCPs or hospital personnel. Owing to the sensitive nature of this kind of health data, the health condition measurements provided in this component are routinely cross-checked and revised to ensure the safety of the users or patients.
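The threshold check performed by this component can be sketched as a comparison of each reading against a configured range. The ranges, key names, and function names below are hypothetical placeholders for illustration; in practice the thresholds would be specified and revised by HCPs for the target population.

```python
from typing import Dict, List, Tuple

# Hypothetical ranges for illustration only; real limits must be set by HCPs.
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "heart_rate_bpm": (50.0, 110.0),
    "body_temperature_c": (35.5, 38.0),
    "spo2_percent": (92.0, 100.0),
    "systolic_bp_mmhg": (90.0, 140.0),
}

def out_of_range(vitals: Dict[str, float]) -> List[str]:
    """Return the names of readings that fall outside their configured range."""
    flagged = []
    for name, value in vitals.items():
        if name not in THRESHOLDS:
            continue  # no threshold configured for this reading
        low, high = THRESHOLDS[name]
        if not (low <= value <= high):
            flagged.append(name)
    return flagged

def needs_escalation(vitals: Dict[str, float]) -> bool:
    # Any out-of-range reading is treated as a reason to alert HCPs.
    return bool(out_of_range(vitals))

reading = {"heart_rate_bpm": 72, "spo2_percent": 89}
print(out_of_range(reading))      # prints ['spo2_percent']
print(needs_escalation(reading))  # prints True
```

Keeping the thresholds in a single table, separate from the checking logic, makes the routine cross-checking and revision described above a configuration change rather than a code change.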

When required or demanded by the users, a peer or human actor can deliver instructions or data via the Data and Personalization Controller to this stage so it can analyze data and generate response content accordingly.

In this analytical stage, the RA is equipped to process a multitude of data types and content. One primary facet is symptom analysis: the RA evaluates user-reported symptoms in tandem with essential health data. From this assessment, the RA can identify potential medical conditions. If a condition appears non-critical and falls within the RA’s informational purview, guidance can be given. However, in scenarios beyond its knowledge or if there’s any doubt, the RA should direct users to seek professional medical counsel.

Additionally, the RA serves a pivotal role in medication adherence by examining a user’s medication schedule and dispatching reminders, thereby reinforcing adherence to therapeutic protocols. Furthermore, the RA’s analytical capabilities extend to evaluating users’ dietary habits, delivering insights or recommendations aligned with individual dietary constraints or needs.
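For the medication reminder function mentioned above, a minimal sketch is to compute the next due dose from a daily schedule. The function name next_reminder and the representation of the schedule as a list of times of day are assumptions for this example; the design itself does not prescribe a scheduling method.

```python
from datetime import datetime, time, timedelta


def next_reminder(schedule: list[time], now: datetime) -> datetime:
    """Return the next scheduled dose time strictly after `now`.

    `schedule` holds the daily dose times and must not be empty.
    """
    for dose_time in sorted(schedule):
        candidate = datetime.combine(now.date(), dose_time)
        if candidate > now:
            return candidate
    # All of today's doses have passed; use the first dose of tomorrow.
    tomorrow = now.date() + timedelta(days=1)
    return datetime.combine(tomorrow, min(schedule))
```

For example, with doses at 08:00 and 20:00, a call made at 09:30 would return 20:00 on the same day, and a call made at 21:00 would return 08:00 on the following day.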

Beyond these, the RA’s continuous monitoring of health metrics, including heart rate, sleep trends, and physical activity, facilitates the detection of health patterns or anomalies over time. By interpreting text or voice inputs, the RA can infer a user’s emotional state, enabling it to offer timely mental health support. Because determining a patient’s emotional state is vital in healthcare service contexts, the RA uses sentiment analysis to tailor its interactions accordingly.
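One simple way to detect anomalies in a monitored metric over time is to compare each new reading with a rolling baseline of the preceding readings. The sketch below uses a z-score rule; the window size, the limit of 3.0, and the function name find_anomalies are assumptions for illustration, and the design does not commit to this particular technique.

```python
from statistics import mean, stdev


def find_anomalies(values, window=7, z_limit=3.0):
    """Return indices whose value deviates strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Flat baseline: treat any change at all as a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > z_limit:
            anomalies.append(i)
    return anomalies
```

With a series of daily resting heart rates such as 62, 64, 63, 61, 65, 63, 62, 64, 95, 63, only the reading of 95 (index 8) is flagged, since the other readings stay close to their preceding week.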

Output processing stage

The output processing stage creates the content of the RA’s outputs. To provide appropriate responses, this stage integrates the results from the data analysis stage. It may use one or more content production techniques, which can be rule-based, machine learning (ML)-based, or keyword matching-based. This stage then sends its output to the output generation stage, which generates both verbal responses and non-verbal embodiment cues according to the context of the RA’s response.
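A rule-based variant of this stage can be sketched as an ordered list of checks over the analysis results, where the first matching rule determines the response plan. The AnalysisResult fields, the intent labels, and the tone and gesture values are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass


@dataclass
class AnalysisResult:
    sentiment: str      # 'positive', 'negative', or 'neutral'
    topic: str          # e.g. 'medication' or 'sleep'
    vitals_alert: bool  # True if a vital sign is outside its range


def plan_response(analysis: AnalysisResult) -> dict:
    """Rule-based content planning: the first matching rule wins."""
    # Safety comes first: an out-of-range vital sign overrides everything else.
    if analysis.vitals_alert:
        return {"intent": "escalate_to_hcp", "tone": "calm", "gesture": "concerned"}
    if analysis.sentiment == "negative":
        return {"intent": f"support_{analysis.topic}", "tone": "empathetic", "gesture": "nod"}
    if analysis.sentiment == "positive":
        return {"intent": f"encourage_{analysis.topic}", "tone": "cheerful", "gesture": "smile"}
    return {"intent": f"inform_{analysis.topic}", "tone": "neutral", "gesture": "idle"}
```

The resulting plan carries both the verbal intent and a non-verbal cue, which matches the hand-off to the output generation stage described above.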

Output generation stage

The output generation stage can use a template-based approach to deliver the RA’s responses to users. This stage customizes the output based on the users’ preferences and the devices at the users’ end. It controls the sequence of actions and responses with the help of the response planning and action scheduler components.
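The template-based approach can be illustrated with Python’s standard string.Template class. The template texts, the preference keys (name, modality), and the function render_response are assumptions for this sketch rather than elements specified by the design.

```python
from string import Template

# Hypothetical response templates keyed by an identifier.
TEMPLATES = {
    "medication_reminder": Template("Hi $name, it is time to take your $medication."),
    "encouragement": Template("Well done, $name. You reached your $goal goal today."),
}


def render_response(template_id: str, preferences: dict, **slots) -> dict:
    """Fill a template and adapt the delivery to the user's preferences."""
    text = TEMPLATES[template_id].substitute(name=preferences["name"], **slots)
    # Speak the response only if the user prefers voice output.
    modality = preferences.get("modality", "text")
    return {"text": text, "speak": modality == "voice"}
```

For instance, render_response("medication_reminder", {"name": "Sam", "modality": "voice"}, medication="metformin") yields the text "Hi Sam, it is time to take your metformin." with speech output enabled.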

Besides generating verbal responses, this stage creates the RA’s embodiment characteristics in the non-verbal cues generation phase, including facial expressions, hand gestures, and body movements. It also creates non-verbal response options that are shown as visual selections on the UI’s display so that users can simply touch or click on them without speaking. The RA’s facial expressions are generated together with the verbal output while maintaining appropriate lip-syncing.

This stage uses a reaction manager component that selects what the RA should be doing through non-verbal cues at any particular point in time. According to a set of pre-defined states, the reaction manager helps the output generation stage produce non-verbal outputs aligned with the textual and verbal content of the RA’s responses. The system produces reaction states once the user and the RA have exchanged the initial interaction. Based on the target domain of the RA’s application, states inside the reaction manager can be modified and updated.
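A reaction manager built on pre-defined states can be sketched as a small finite-state machine. The states, events, and cue values below are hypothetical and would be replaced or extended for a given application domain, in line with the statement that the states can be modified and updated.

```python
from enum import Enum


class ReactionState(Enum):
    IDLE = "idle"
    LISTENING = "listening"
    EMPATHIZING = "empathizing"
    SPEAKING = "speaking"


# Hypothetical non-verbal cues shown while the RA is in each state.
CUES = {
    ReactionState.IDLE: {"face": "neutral", "gesture": "none"},
    ReactionState.LISTENING: {"face": "attentive", "gesture": "nod"},
    ReactionState.EMPATHIZING: {"face": "concerned", "gesture": "lean_forward"},
    ReactionState.SPEAKING: {"face": "friendly", "gesture": "hand_gesture"},
}

# Allowed transitions: (current state, event) -> next state.
TRANSITIONS = {
    (ReactionState.IDLE, "user_started"): ReactionState.LISTENING,
    (ReactionState.LISTENING, "negative_sentiment"): ReactionState.EMPATHIZING,
    (ReactionState.LISTENING, "response_ready"): ReactionState.SPEAKING,
    (ReactionState.EMPATHIZING, "response_ready"): ReactionState.SPEAKING,
    (ReactionState.SPEAKING, "response_done"): ReactionState.IDLE,
}


class ReactionManager:
    """Tracks the current reaction state and returns the matching cues."""

    def __init__(self):
        self.state = ReactionState.IDLE

    def handle(self, event: str) -> dict:
        # Unknown events leave the state unchanged.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return CUES[self.state]
```

Because the transitions and cues are plain tables, adapting the manager to a new target domain amounts to editing those tables rather than the control logic.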

Discussion

The conceptual design presented in this paper offers a unique block-based design that caters to the dynamic and rapidly evolving technology landscape. Our conceptual design strategically builds upon available frameworks and workflows (1,3,6-10,17,28), seeking to address limitations and advance the efficacy of such systems. Historically, many frameworks for RAs, e.g., those in (1,20,25,30), have been either rigid or highly generic in terms of design and development, hindering adaptability and specificity for the multifaceted world of healthcare. In these initiatives, researchers have relied on established design templates (1,2,11,24) when conceptualizing and prototyping RAs. Our conceptual design builds upon core principles from existing RA frameworks identified in the systematic literature review, emphasizing user-centered design (UCD), adaptability, and empathy. However, it further refines these elements to better cater to the specific demands of healthcare settings, especially within the realm of mHealth. One distinct feature of our design is its modular, block-based structure, which is inspired by extant workflows but augmented for greater flexibility and scalability. Whereas traditional workflows often guide RA development linearly, our block-based approach allows for the dynamic integration of emerging technologies and research insights. This helps keep RAs built on the design both technologically contemporary and clinically pertinent.

Furthermore, while many existing RA designs in healthcare draw from generic CA theories, our conceptual design prioritizes frameworks rooted deeply in health communication, ensuring the agent’s interactions resonate with patients’ psychological and emotional needs. This emphasis makes our design more attuned to the sensitive and complex dynamics of patient-provider interactions. The design’s adaptability means that, as new technologies emerge, RAs can remain at the forefront of healthcare delivery. Moreover, it offers a structured yet flexible blueprint for designers, making it a valuable asset for both innovation and systematic development. The robustness of our design is also reflected in its ability to integrate with advanced ML algorithms. While many existing workflows for RAs acknowledge the importance of adaptability, our design goes further by supporting real-time, adaptive learning, so that RAs can evolve with each interaction and become more context-aware and responsive.

In addition, researchers often need a conceptual design to guide their thought processes when designing experiments or developing concepts. Since this conceptual design incorporates empirically tested RA features, it should allow researchers to experiment with new ideas. Moreover, the independently operating stages of the conceptual design should allow researchers to explore each stage in depth and conceptualize further enhancements. In other words, we anticipate that this conceptual design will be very useful in advancing RA technology, particularly in mHealth development.

The conceptual design can be used in conjunction with existing conversational bot-building software such as Google Dialogflow (56). Dialogflow-like tools provide a platform for building CAs that can understand natural language input and respond appropriately. The platform uses ML algorithms to analyze user input and generate responses. However, these responses are typically based on predefined rules or templates, and the agent may not be able to engage in a more nuanced conversation that goes beyond a simple question-answer exchange. To incorporate the conceptual design for developing an RA into this kind of platform, the agent’s responses can be programmed to take into account the user’s emotional state, personality, and past interactions with the agent. This requires the integration of additional ML algorithms that can detect and interpret emotional cues in user input and use that information to tailor the agent’s responses accordingly. For example, if a user expresses frustration or dissatisfaction with the agent’s responses, the agent can respond in a more empathetic and understanding manner, acknowledging the user’s feelings and attempting to address their concerns. The agent can also use information from past interactions to personalize its responses to the user, making the conversation feel more natural and engaging.

The integration of our conceptual design of mHealth RA into existing frameworks from health communication or social presence research can be understood through several overlapping areas including theoretical foundations in health communication, social presence and relational dynamics, and trust and credibility (13,57). RAs can be designed to address the perceived barriers, benefits, and susceptibility related to the target health service or behavior. Health communication theories, e.g., the health belief model (58), social cognitive theory (59), and the theory of planned behavior (60), emphasize the role of beliefs, self-efficacy, and social influence in healthcare delivery. mHealth RAs can leverage the concept of social presence theory (61), i.e., the feeling that another is “present” in a mediated environment. By embodying certain human-like qualities, these RAs can provide a sense of companionship, thereby influencing users’ perception and experience of the system, which is a critical component in the social presence theory. RAs, with their conversational abilities, can evoke a sense of ’social presence’, making the interaction feel more personal and human-like, which is especially important in sensitive areas like health.

Moreover, one of the major advantages of digital health tools, including mHealth RAs, is their ability to personalize feedback and interventions based on user data. This aligns well with personalized health communication strategies (62), enhancing their efficacy. As mentioned in our conceptual design, RAs can be programmed to deliver health services that are tailored to an individual’s needs, preferences, and characteristics, building upon the framework of personalized health interventions (62). Many health communication strategies rely on feedback to ensure that messages and interactions are understood and that behaviors are being modified (63). Our proposed design enables RAs to provide real-time feedback for immediate correction or reinforcement, so these health communication strategies can be adopted here. Drawing from self-determination theory (SDT), RAs can be designed to provide supportive feedback in a way that satisfies the user’s basic psychological needs, thereby enhancing motivation (64).

In addition, effective health communication requires cultural competence. Integrating concepts from cultural health communication theory (65), the RA can be designed to respect and respond to the cultural needs of the user. mHealth RAs can be programmed to understand and respect cultural nuances, offering advice and information that’s culturally appropriate. Similarly, just as with human HCPs, the credibility and trustworthiness of mHealth RAs are paramount. The source credibility theory (66) can be applied, where the expertise and trustworthiness of the RA play roles in message acceptance.

Despite its advantages, the conceptual design also has several limitations, particularly in healthcare contexts. First, it can provide guidelines for creating RAs, but it may not be comprehensive enough to cover all possible scenarios: healthcare contexts can be complex, and the design may not capture all the nuances of patient interactions. Second, although the design is useful for standardizing the development process, it can also limit creativity and innovation, as developers may feel constrained by it and may not explore new ideas or approaches. Third, the design has not been validated through empirical studies, making it difficult to assess its effectiveness in producing successful RAs. Fourth, RAs can collect sensitive data about patients, such as their health status and personal information, and our conceptual design may not provide sufficient guidance on how to handle this data ethically, potentially leading to privacy breaches or other ethical issues. Finally, the conceptual design does not provide specific directions for increasing trust and transparency in interactions with users, nor does it offer guidance on delivering more human-like characteristics and interactions.

In summary, our conceptual design for mHealth RAs builds on foundational principles from existing workflows while offering a more flexible, context-specific, and technologically advanced approach. We believe this combination positions our design at the forefront of healthcare RA development, promising more effective and empathetic patient engagements. Researchers may further explore the effectiveness and usability of the conceptual design outlined in this article. To that end, separate user evaluation studies may be conducted, and the evaluation results can then be used to compare the two versions in terms of acceptability, effectiveness, usability, and design issues. Design feedback from these user studies will also help refine the current conceptual design.

Conclusions

A comprehensive conceptual design of an mHealth RA named ‘RACares’ that can be used in a variety of non-life-threatening healthcare contexts is described in this article. The proposed conceptual design represents the first attempt to provide a comprehensive guide for developing mHealth RAs. The outcomes of the systematic review served as the inspiration for this conceptual design. The cornerstone of this design is its block-based structure, fostering flexibility and scalability, especially crucial given the rapid evolution of technology. By aligning with recent advancements in computing and digital health, RACares aims to produce RAs that can understand and interpret human emotions, tailoring their responses to individual user needs. While the merits of the design are manifold, particularly its potential for driving advancements in RA technology, it is not without its constraints. The healthcare landscape is intricate, and the design might not encapsulate all aspects of patient interactions. It is pivotal to recognize the significance of data privacy in the health sector, and our design might require additional layers to ethically handle sensitive patient data. To this end, we advocate for further empirical studies to validate and refine the current conceptual design, ensuring it resonates with the evolving needs of the healthcare community.

Acknowledgments

Funding: None.

Footnote

Reporting Checklist: The authors have completed the PRISMA reporting checklist. Available at https://mhealth.amegroups.com/article/view/10.21037/mhealth-23-46/rc

Peer Review File: Available at https://mhealth.amegroups.com/article/view/10.21037/mhealth-23-46/prf

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://mhealth.amegroups.com/article/view/10.21037/mhealth-23-46/coif). B.M.C. serves as an unpaid editorial board member of mHealth from March 2023 to February 2025. The other authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Bickmore TW, Mitchell SE, Jack BW, et al. Response to a Relational Agent by Hospital Patients with Depressive Symptoms. Interact Comput 2010;22:289-98. [Crossref] [PubMed]

- Bickmore T, Cassell J. Relational agents: a model and implementation of building user trust. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 2001:396-403.

- Bickmore T, Schulman D. Practical approaches to comforting users with relational agents. In: CHI’07 Extended Abstracts on Human Factors in Computing Systems, 2007:2291-6.

- Richards D, Caldwell P. Improving Health Outcomes Sooner Rather Than Later via an Interactive Website and Virtual Specialist. IEEE J Biomed Health Inform 2018;22:1699-706. [Crossref] [PubMed]

- Kabir MF, Schulman D, Abdullah AS. Promoting Relational Agent for Health Behavior Change in Low and Middle-Income Countries (LMICs): Issues and Approaches. J Med Syst 2019;43:227. [Crossref] [PubMed]

- Prochaska JJ, Vogel EA, Chieng A, et al. A Therapeutic Relational Agent for Reducing Problematic Substance Use (Woebot): Development and Usability Study. J Med Internet Res 2021;23:e24850. [Crossref] [PubMed]

- Puskar K, Schlenk EA, Callan J, et al. Relational agents as an adjunct in schizophrenia treatment. J Psychosoc Nurs Ment Health Serv 2011;49:22-9. [Crossref] [PubMed]

- Islam A, Chaudhry BM. Acceptance evaluation of a covid-19 home health service delivery relational agent. In: Lewy H, Barkan R, eds. Pervasive Computing Technologies for Healthcare, Springer, Cham 2022, pp. 40-52.

- Vardoulakis LP, Ring L, Barry B, et al. Designing relational agents as long term social companions for older adults. In: International Conference on Intelligent Virtual Agents, Springer 2012, pp. 289-302.

- Chaudhry BM, Islam A. A Mobile Application-Based Relational Agent as a Health Professional for COVID-19 Patients: Design, Approach, and Implications. Int J Environ Res Public Health 2022;19:13794. [Crossref] [PubMed]

- Bickmore T, Schulman D, Yin L. Maintaining Engagement in Long-term Interventions with Relational Agents. Appl Artif Intell 2010;24:648-66. [Crossref] [PubMed]

- Bickmore TW, Picard RW. Establishing and maintaining long-term human-computer relationships. ACM Transactions on Computer-Human Interaction (TOCHI) 2005;12:293-327. [Crossref]

- Bickmore T, Gruber A, Picard R. Establishing the computer-patient working alliance in automated health behavior change interventions. Patient Educ Couns 2005;59:21-30. [Crossref] [PubMed]

- Campbell RH, Grimshaw MN, Green GM. Relational agents: A critical review. The Open Virtual Reality Journal 2009;1:1-7. [Crossref]

- Lucas GM, Rizzo A, Gratch J, et al. Reporting mental health symptoms: breaking down barriers to care with virtual human interviewers. Frontiers in Robotics and AI 2017;4:51. [Crossref]

- Kiesler S, Powers A, Fussell SR, et al. Anthropomorphic interactions with a robot and robot–like agent. Social cognition 2008;26:169-81. [Crossref]

- Morris RR, Kouddous K, Kshirsagar R, et al. Towards an Artificially Empathic Conversational Agent for Mental Health Applications: System Design and User Perceptions. J Med Internet Res 2018;20:e10148. [Crossref] [PubMed]

- Clark L, Pantidi N, Cooney O, et al. What makes a good conversation? Challenges in designing truly conversational agents. In: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, 2019 pp. 1-12.

- Van Pinxteren MME, Pluymaekers M, Lemmink JGAM. Human-like communication in conversational agents: a literature review and research agenda. Journal of Service Management 2022;31:203-25. [Crossref]

- Laranjo L, Dunn AG, Tong HL, et al. Conversational agents in healthcare: a systematic review. J Am Med Inform Assoc 2018;25:1248-58. [Crossref] [PubMed]

- Provoost S, Lau HM, Ruwaard J, et al. Embodied conversational agents in clinical psychology: a scoping review. J Med Internet Res 2017;19:e151. [Crossref] [PubMed]

- Vaidyam AN, Wisniewski H, Halamka JD, et al. Chatbots and Conversational Agents in Mental Health: A Review of the Psychiatric Landscape. Can J Psychiatry 2019;64:456-64. [Crossref] [PubMed]

- McHugh ML. Interrater reliability: the kappa statistic. Biochem Med (Zagreb) 2012;22:276-82. [Crossref] [PubMed]

- Bickmore T, Pfeifer L. Relational agents for antipsychotic medication adherence. In: CHI’08 Workshop on Technology in Mental Health, 2008.

- Prochaska JJ, Vogel EA, Chieng A, et al. A randomized controlled trial of a therapeutic relational agent for reducing substance misuse during the COVID-19 pandemic. Drug Alcohol Depend 2021;227:108986. [Crossref] [PubMed]

- Bickmore TW, Pfeifer LM, Jack BW. Taking the time to care: empowering low health literacy hospital patients with virtual nurse agents. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 2009 pp. 1265-74.

- Baker S, Richards D, Caldwell P. Relational agents to promote ehealth advice adherence. In: Pacific Rim International Conference on Artificial Intelligence, 2014 pp. 1010-5.

- Félix IB, Guerreiro MP, Cavaco A, et al. Development of a Complex Intervention to Improve Adherence to Antidiabetic Medication in Older People Using an Anthropomorphic Virtual Assistant Software. Front Pharmacol 2019;10:680. [Crossref] [PubMed]

- Bott N, Wexler S, Drury L, et al. A Protocol-Driven, Bedside Digital Conversational Agent to Support Nurse Teams and Mitigate Risks of Hospitalization in Older Adults: Case Control Pre-Post Study. J Med Internet Res 2019;21:e13440. [Crossref] [PubMed]

- Rubin A, Livingston NA, Brady J, et al. Computerized Relational Agent to Deliver Alcohol Brief Intervention and Referral to Treatment in Primary Care: a Randomized Clinical Trial. J Gen Intern Med 2022;37:70-7. [Crossref] [PubMed]

- Thompson D, Callender C, Gonynor C, et al. Using Relational Agents to Promote Family Communication Around Type 1 Diabetes Self-Management in the Diabetes Family Teamwork Online Intervention: Longitudinal Pilot Study. J Med Internet Res 2019;21:e15318. [Crossref] [PubMed]

- Zhou S, Bickmore T, Rubin A, et al. A relational agent for alcohol misuse screening and intervention in primary care. In: CHI 2017 Workshop on Interactive Systems in Healthcare (WISH), 2017.

- Magnani JW, Ferry D, Swabe G, et al. Design and rationale of the mobile health intervention for rural atrial fibrillation. Am Heart J 2022;252:16-25. [Crossref] [PubMed]

- Magnani JW, Schlusser CL, Kimani E, et al. The Atrial Fibrillation Health Literacy Information Technology System: Pilot Assessment. JMIR Cardio 2017;1:e7. [Crossref] [PubMed]

- Guhl E, Althouse AD, Pusateri AM, et al. The Atrial Fibrillation Health Literacy Information Technology Trial: Pilot Trial of a Mobile Health App for Atrial Fibrillation. JMIR Cardio 2020;4:e17162. [Crossref] [PubMed]

- Bickmore TW, Caruso L, Clough-Gorr K. Acceptance and usability of a relational agent interface by urban older adults. In: CHI’05 Extended Abstracts on Human Factors in Computing Systems, 2005 pp. 1212-5.

- Wang C, Bickmore T, Bowen DJ, et al. Acceptability and feasibility of a virtual counselor (VICKY) to collect family health histories. Genet Med 2015;17:822-30. [Crossref] [PubMed]

- Buinhas S, Cláudio AP, Carmo MB, et al. Virtual assistant to improve self-care of older people with type 2 diabetes: First prototype. In: García-Alonso J, Fonseca C, eds. Gerontechnology. International Workshop on Gerontechnology. Cham: Springer 2019 pp. 236-48.

- Balsa L, Neves P, Félix I, et al. Intelligent virtual assistant for promoting behaviour change in older people with t2d. In: Moura Oliveira P, Novais P, Reis L, eds. Progress in Artificial Intelligence. EPIA Conference on Artificial Intelligence, Cham: Springer 2019 pp. 372-83.

- Balsa J, Félix I, Cláudio AP, et al. Usability of an Intelligent Virtual Assistant for Promoting Behavior Change and Self-Care in Older People with Type 2 Diabetes. J Med Syst 2020;44:130. [Crossref] [PubMed]

- Gaffney H, Mansell W, Tai S. Agents of change: Understanding the therapeutic processes associated with the helpfulness of therapy for mental health problems with relational agent MYLO. Digit Health 2020;6:2055207620911580. [Crossref] [PubMed]

- Dworkin MS, Lee S, Chakraborty A, et al. Acceptability, Feasibility, and Preliminary Efficacy of a Theory-Based Relational Embodied Conversational Agent Mobile Phone Intervention to Promote HIV Medication Adherence in Young HIV-Positive African American MSM. AIDS Educ Prev 2019;31:17-37. [Crossref] [PubMed]

- Bickmore T, Vardoulakis L, Jack B, et al. Automated promotion of technology acceptance by clinicians using relational agents. In: Aylett R, Krenn B, Pelachaud C, et al., eds. Intelligent Virtual Agents. IVA 2013. Berlin: Springer 2013 pp. 68-78.

- Bickmore TW, Caruso L, Clough-Gorr K, et al. It’s just like you talk to a friend: relational agents for older adults. Interacting with Computers 2005;17:711-35. [Crossref]

- Holter MT, Johansen A, Brendryen H. How a Fully Automated eHealth Program Simulates Three Therapeutic Processes: A Case Study. J Med Internet Res 2016;18:e176. [Crossref] [PubMed]

- Bickmore TW, Mauer D, Brown T. Context Awareness in a Handheld Exercise Agent. Pervasive Mob Comput 2009;5:226-35. [Crossref] [PubMed]

- Bickmore T, Schulman D. The comforting presence of relational agents. In: CHI’06 Extended Abstracts on Human Factors in Computing Systems, 2006 pp. 550-5.

- Chaudry BM, Connelly KH, Siek KA, et al. Mobile interface design for low-literacy populations. In: Proceedings of the 2nd ACM SIGHIT International Health Informatics Symposium, 2012 pp. 91-100.

- Chaudhry BM, Schaefbauer C, Jelen B, et al. Evaluation of a food portion size estimation interface for a varying literacy population. In: Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, 2016 pp. 5645-57.

- Siek KA, Connelly KH, Chaudry B, et al. Evaluation of two mobile nutrition tracking applications for chronically ill populations with low literacy skills. In: Mobile Health Solutions for Biomedical Applications, IGI Global, 2009 pp. 1-23.

- Lutkevich B. What is a knowledge base? Definition, examples and guide. TechTarget (2022). Available online: https://www.techtarget.com/whatis/definition/knowledge-base

- Weld H, Huang X, Long S, et al. A survey of joint intent detection and slot filling models in natural language understanding. ACM Computing Surveys 2021;55:156.

- Feldman R. Techniques and applications for sentiment analysis. Communications of the ACM 2013;56:82-9. [Crossref]

- Ekman P. Facial expression and emotion. Am Psychol 1993;48:384-92. [Crossref] [PubMed]

- Ball G, Breese J. Emotion and personality in a conversational agent. Embodied conversational agents 2000:189.

- Sabharwal N, Agrawal A. Introduction to Google Dialogflow. Cognitive Virtual Assistants Using Google Dialogflow: Develop Complex Cognitive Bots Using the Google Dialogflow Platform. 2020:13-54.

- Free C, Phillips G, Watson L, et al. The effectiveness of mobile-health technologies to improve health care service delivery processes: a systematic review and meta-analysis. PLoS Med 2013;10:e1001363. [Crossref] [PubMed]

- Janz NK, Becker MH. The Health Belief Model: a decade later. Health Educ Q 1984;11:1-47. [Crossref] [PubMed]

- Schunk DH, DiBenedetto MK. Motivation and social cognitive theory. Contemporary Educational Psychology 2020;60:101832. [Crossref]

- Bosnjak M, Ajzen I, Schmidt P. The Theory of Planned Behavior: Selected Recent Advances and Applications. Eur J Psychol 2020;16:352-6. [Crossref] [PubMed]

- Kreijns K, Xu K, Weidlich J. Social Presence: Conceptualization and Measurement. Educ Psychol Rev 2022;34:139-70. [Crossref] [PubMed]

- Noar SM, Grant Harrington N, Van Stee SK, et al. Tailored health communication to change lifestyle behaviors. American Journal of Lifestyle Medicine 2011;5:112-22. [Crossref]

- Mheidly N, Fares J. Leveraging media and health communication strategies to overcome the COVID-19 infodemic. J Public Health Policy 2020;41:410-20. [Crossref] [PubMed]

- Ryan RM, Deci EL. Self-determination theory. In: Michalos AC, editor. Encyclopedia of Quality of Life and Well-being Research, Springer, 2022 pp. 1-7.

- Tan NQP, Cho H. Cultural Appropriateness in Health Communication: A Review and A Revised Framework. J Health Commun 2019;24:492-502. [Crossref] [PubMed]

- Tan SM, Liew TW. Designing embodied virtual agents as product specialists in a multi-product category e-commerce: The roles of source credibility and social presence. International Journal of Human–Computer Interaction 2020;36:1136-49. [Crossref]

Cite this article as: Islam A, Chaudhry BM, Islam A. RACares: a conceptual design to guide mHealth relational agent development based on a systematic review. mHealth 2024;10:11.