Working towards a ready-to-implement digital literacy program

Highlight box

Key findings

• Digital Outreach for Obtaining Resources and Skills (DOORs), a digital literacy program, can improve participants’ digital literacy and serves as a scalable, customizable model that can inform similar efforts.

What is known and what is new?

• As healthcare becomes more reliant on technology, the second digital divide, a lack of skills using technology, persists, especially among underserved and marginalized communities.

• This manuscript describes a digital literacy program that has been updated with community feedback to be more accessible and reports participant outcomes using surveys and semi-structured interviews.

What is the implication, and what should change now?

• Digital literacy programming can incorporate access efforts to maximize impact, should build community trust to integrate community perspectives into curricula, and can use DOORs as a starting point to inform similar efforts specific to the needs of each population.

Introduction

Background

With the Coronavirus Disease 2019 (COVID-19) pandemic, healthcare systems across the world have increasingly relied on telehealth and mobile apps to reach patients (1). This widespread adoption of digital health promises increased access to care, especially in mental health (2). While more patients have used digital mental health services since the pandemic, emerging evidence suggests that high-risk patients and those with serious mental illness may actually be able to access fewer services today through telehealth (3). Further, in one study of those receiving mental health telehealth during the height of COVID-19, only 5% reported receiving care for the first time, suggesting digital care may not be bringing new patients into care (4). Mobile health applications for mental health offer a complementary tool for traditional telehealth and have also increased in popularity, but continue to struggle with patient engagement (5-7), which precludes their clinical impact.

The challenges of telehealth and mobile health are multifaceted, but one common barrier is that many patients lack the technical skills and confidence to use digital resources (8-11). As more people around the world have access to technology like smartphones, this second digital divide, a lack of digital knowledge and skills, represents the new primary challenge for digital health (12,13). For example, a 2021 study assessed the digital skills of adults with serious mental illness across the United Kingdom and found that although over 85% owned a digital device, 42.2% of patients reported having no foundational skills (12). The same study found this population was eager to learn these skills if offered the training (12). This challenge is present not only in high-income countries but also in global mental health settings, as access to technology is rapidly expanding but digital literacy is lagging (14).

Low-income communities and racial minorities are more likely to face inequities of broadband access and smartphone ownership, putting these communities at heightened risk for low digital literacy levels (15-17). In addition, digital health apps are not created with racial minorities’ perspectives (18), thus limiting these tools’ usability and deepening digital exclusion. These access and literacy gaps are crucial to mitigate, as digital inclusion is now considered a social determinant of health (19). In response, calls to promote digital health equity with a particular focus on marginalized populations have become widespread (20,21).

Rationale and knowledge gap

While several grassroots efforts have emerged, many are limited in scope by their regional focus, computer-centric teaching, and lack of evaluation metrics. Impressive efforts to help patients engage with their local clinic or health system have often focused on using a computer to connect to a telehealth visit which does offer important value (22,23). Such programs are often challenging to translate across healthcare systems and regions, given their hyper-specialization to a unique clinic, patient portal, or healthcare system. Most of these programs focus on computers which are practical but may exclude the majority of Medicare and Medicaid patients who connect to digital health services via their smartphones. Finally, a lack of well-established health-based digital literacy scales means that program evaluations are often difficult to compare. Those scales that do exist often focus solely on computer skills (24-26), making an evaluation of smartphone-based digital literacy efforts even more challenging (27). While new scales are a topic of active research (28,29), today demonstrating the effectiveness of digital literacy programs remains challenging.

These challenges likely impact all digital literacy programs, including the smartphone-focused Digital Outreach for Obtaining Resources and Skills (DOORs) program we have advanced over the past 5 years (30,31). The 8-week program teaches participants how to use their smartphones for functional skills in everyday life, achieve wellness goals, and connect to formal health services. The high prevalence of smartphone ownership (32,33), versatility in functions, and standardization of devices make smartphone training more inclusive and accessible than computer-focused training. For the past 5 years, our team has conducted the DOORs program in diverse sites across the Boston community including a first-episode psychosis program, clubhouses for individuals with serious mental illnesses, and an in-patient psychiatric unit. We have also publicly shared DOORs, assisted in its adaptation to a peer support program in Los Angeles, a community program in Houston, and a rehabilitation program for veterans in Illinois, and supported the program at community mental health centers in California.

Objective

In light of the rapid digitization of healthcare resources and the lack of digital literacy programs that can be generalized to broad populations, we recognize the increasing need for disseminating a digital literacy program. With an established, peer-reviewed curriculum and patient outcomes (30,31,34), we envision that DOORs can be an evidence-based solution to help mitigate the second digital divide across the globe. The aim of this paper is to describe how we have worked to adapt DOORs to make the program more accessible and how participants have responded to these changes.

Methods

Improving the accessibility of the DOORs curriculum

From June to August 2022, the first and second authors (Alon N and Perret S) conducted virtual meetings with partners from the National Digital Inclusion Alliance (35) who were unfamiliar with the DOORs program. Partners were all based in the United States and represented community organizations and regional and local government divisions. Alon N and Perret S attended each meeting, gave a PowerPoint presentation about DOORs, and encouraged open conversation from the partners after the presentation. Licensed clinicians (i.e., MD, PhD), who partner with the lab and were familiar with DOORs, added insight into proposed changes collated from these meetings during in-person group discussions with Alon N and Perret S. Two goals emerged from the feedback provided: (I) clearer implementation guidelines and (II) more robust customization of DOORs so the curriculum can meet different communities’ needs.

Procedures

We piloted the new DOORs curriculum and measurement tools with two groups of adults with serious mental illnesses, currently in treatment, in community clubhouses in Boston. Participants ranged in age from 25 to 71 years, with a mean age of 55. Participants were recruited through flyers and clubhouse staff’s encouragement. Any participant who was present in the clubhouse during the time of a specific session was able to attend. Even those without a smartphone could attend, as the research team brought smartphones to be used during the sessions. Both groups met every week in their respective clubhouses from April to June 2022 for a total of 8 weeks. Sessions lasted one and a half hours and were taught by the research team from the Division of Digital Psychiatry and trained volunteers. Participants were given a survey at the beginning and at the conclusion of each session. More information about the measurement tool is provided in the next section.

DOORs was run as an optional community service, and to remain accessible to all, participants were not pre-registered nor mandated to attend all eight sessions. Participants were encouraged to attend all eight sessions, but attendance fluctuated due to factors such as employment, COVID-19 infection, and doctor’s appointments. Despite these fluctuations, most participants remained engaged for the entire session, with engaged participants defined as those who completed both a pre and post survey. Session 2 had the highest attendance of 19 participants (summed from both sites) and 89% of participants remained engaged for the entire lesson, while session 6 had the lowest attendance of 10 participants (summed from both sites) and 90% remained engaged for the entire lesson. At the conclusion of the 8 weeks, any participant who had attended at least one session of DOORs was presented with the opportunity to complete an in-person semi-structured interview led by a member of the research team. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The Institutional Review Board of Beth Israel Deaconess Medical Center approved data collection (protocol No. 2022P000923). Per our IRB approval, verbal informed consent was obtained prior to completion of the surveys and interviews, as this was a low-risk study that did not collect personal health information. All methods were carried out in accordance with relevant guidelines and regulations.
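The engagement measure defined above (the share of attendees who completed both a pre and post survey) can be illustrated with a short sketch. The attendance figures (19 and 10) come from the text; the completer counts (17 and 9) are not reported and are assumptions, inferred as the only whole numbers consistent with the reported 89% and 90%. The function name is hypothetical.

```python
def engagement_rate(attended: int, completed_both: int) -> float:
    """Share of attendees who completed both the pre and post survey."""
    if attended <= 0:
        raise ValueError("attended must be positive")
    if not 0 <= completed_both <= attended:
        raise ValueError("completed_both must be between 0 and attended")
    return completed_both / attended


# session number -> (attended, completed both surveys)
# Attendance is taken from the text; completer counts are inferred assumptions.
sessions = {2: (19, 17), 6: (10, 9)}

for session, (attended, completed) in sessions.items():
    rate = engagement_rate(attended, completed)
    print(f"Session {session}: {rate:.0%} engaged")
```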

Measurement tools

As previously mentioned, there is no standard scale that measures digital literacy. Thus, throughout DOORs, we have continued to refine our own measurement tool to quantify participants’ improvement and inform future directions of the program. Previous DOORs surveys have been informed by functional outcomes scales, as these scales have been used to assess specific, targeted skills in adults with serious mental illnesses (31,36,37). However, there is not a validated functional outcome scale that assesses patients’ digital literacy. Thus, we have created our own surveys that incorporate these scales’ theoretical underpinning but assess the digital literacy skills that are presented in DOORs sessions.

Due to attendance fluctuations between sessions, eight surveys that correspond to the eight sessions were developed. Each survey only assesses participants’ comfort and ability to perform the digital skills taught in the session conducted that day. Thus, all items across the eight surveys differ. For example, session 3 teaches participants how to use the calendar and maps apps. Thus, the survey participants complete at the beginning and end of session 3 will only assess their ability and comfort to use the calendar and maps apps. In total, the eight surveys assess 29 digital skills ranging from basic (e.g., how to make a phone call) to more complex competencies (e.g., how to download an app).

The surveys contain two types of questions. First, “functional” questions were designed to determine participants’ digital literacy more objectively and mitigate some of the bias inherent to self-report. For example, to test if participants knew how to connect their device to Wi-Fi, they were presented pictures of six apps and asked to circle the app that would allow them to connect to Wi-Fi. These questions were either multiple choice or short answer. After the completion of these functional questions, participants were asked the second type of question, which was confidence-based. For example, participants were asked to rate the statement “I can connect to Wi-Fi” on a scale from one to ten, with one indicating strongly disagree and ten indicating strongly agree.

We recognize that creating our own measurement tool comes with limitations. In our latest iteration, we continue to mitigate limitations of the surveys previously used in the program, as exemplified by the functional questions, which work to reduce self-report bias. Yet, all scales that measure facility with technology, including our own, rely on self-reports (24-29) and are thus susceptible to the bias that self-reports introduce. A copy of an updated survey for one session of DOORs is provided in Appendix 1.

Semi-structured interviews

To gain an understanding of participants’ thoughts, feelings, and experiences in the program, semi-structured interviews were conducted. Questions asked participants about their smartphone use before and after participation in DOORs and their experience throughout the program’s duration. Adherence to the interview guide was encouraged, yet flexibility was allowed to maintain the fluidity of the conversation. Questions were excluded if participants expressed discomfort in answering. A copy of the interview guide is included in Appendix 2.

Statistical analysis

For the surveys, participants’ scores were excluded from the analytical set if they did not complete both a pre and post survey. For functional-based questions, participants’ responses were scored as either zero (wrong answer) or one (correct answer). Individual participants’ scores were averaged across all the functional-based questions that were assessing the same digital skill. For confidence-based questions, participants’ answers on the 1–10 numerical scale were recorded. Paired t-tests were conducted to determine if differences between pre and post surveys were statistically significant. Due to attendance fluctuations, each digital skill was analyzed separately to generate 29 pre- and post-test comparisons.
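The survey analysis steps above (0/1 scoring, per-skill averaging, exclusion of unpaired surveys, and a paired t-test) can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the records, participant IDs, skill label, and function names are all hypothetical. The sketch computes only the paired t statistic and degrees of freedom using the Python standard library; a p-value would additionally require a t-distribution, for example via `scipy.stats.ttest_rel`.

```python
import math
import statistics
from collections import defaultdict

# Hypothetical records: (participant_id, skill, phase, item_correct).
# phase is "pre" or "post"; item_correct is 1 (correct) or 0 (wrong).
RESPONSES = [
    ("p1", "wifi", "pre", 0), ("p1", "wifi", "pre", 1),
    ("p1", "wifi", "post", 1), ("p1", "wifi", "post", 1),
    ("p2", "wifi", "pre", 0), ("p2", "wifi", "pre", 0),
    ("p2", "wifi", "post", 1), ("p2", "wifi", "post", 1),
    ("p3", "wifi", "pre", 1), ("p3", "wifi", "pre", 0),
    ("p3", "wifi", "post", 1), ("p3", "wifi", "post", 0),
    ("p4", "wifi", "pre", 1), ("p4", "wifi", "pre", 1),  # no post survey
]


def skill_scores(responses, skill):
    """Average each participant's 0/1 items for one skill, per phase."""
    items = defaultdict(list)
    for pid, sk, phase, correct in responses:
        if sk == skill:
            items[(pid, phase)].append(correct)
    return {key: sum(vals) / len(vals) for key, vals in items.items()}


def paired_scores(scores):
    """Keep only participants who have both a pre and a post score."""
    pids = sorted({pid for pid, _ in scores})
    return [
        (scores[(pid, "pre")], scores[(pid, "post")])
        for pid in pids
        if (pid, "pre") in scores and (pid, "post") in scores
    ]


def paired_t(pairs):
    """Return (t statistic, degrees of freedom) for post minus pre."""
    diffs = [post - pre for pre, post in pairs]
    if len(diffs) < 2:
        raise ValueError("need at least two complete pairs")
    sd = statistics.stdev(diffs)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


pairs = paired_scores(skill_scores(RESPONSES, "wifi"))
t_stat, dof = paired_t(pairs)
print(f"n pairs = {len(pairs)}, t = {t_stat:.3f}, df = {dof}")
```

In this toy data, participant `p4` is dropped because no post survey exists, mirroring the exclusion rule described above. Repeating the procedure once per skill would yield the 29 separate comparisons.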

For semi-structured interviews, all interviews were audio recorded, and recordings were transcribed verbatim and re-listened to by Alon N to ensure accuracy. All data were analyzed using NVivo in accordance with the thematic analysis framework outlined by Braun and Clarke (38). Alon N and Perret S independently and fully coded the transcripts and generated the initial codes. All authors aggregated the initial codes to produce larger themes and compared these themes to the original transcripts to ensure that the themes encompassed the data. The refinement of the themes and any discrepancies were discussed by the research team until a consensus was reached.

Results

Improving the accessibility of the DOORs curriculum

Our efforts are summarized in Figure 1 and include an updated DOORs curriculum, updated facilitator manual, an online platform with a learning management system, standardized training, patient-facing educational handouts, consolidation of all DOORs materials into a single package that is ready to be shared with other groups, and implementation of a single-session intervention model. In addition, we are currently translating all materials to Spanish to enable DOORs to reach Spanish-speaking populations.

In 2021, the DOORs curriculum was updated from its previous focus on helping participants increase their use of smartphones to achieve wellness goals (30) to a more skill-based foundation (31). The skill-based curriculum teaches basic smartphone utility, and thus DOORs can benefit a broad population. For example, learning how to organize one’s events on a calendar and how to send an email can promote any individual’s productivity and independence. The curriculum also reflects changes in smartphone technology, such as how to use a device without a home button, and the increasing reliance on telehealth services. To ensure all participants can partake, the first session of DOORs determines participants’ eligibility and signs them up for a free smartphone provided by the Affordable Connectivity Program’s (ACP) Lifeline Assistance (39). We have also created interactive brochures that outline a framework of how to evaluate mobile health applications (40,41). All DOORs materials (the facilitator manual, patient-facing handouts, online platform, promotional flyers, and PowerPoint slides) can be accessed and edited by partners running the course.

In response to the pandemic, we have also translated DOORs onto an online platform found at skills.digitalpsych.org. For instructors, the online platform can allow for the widespread dissemination of DOORs. The learning management system embedded on the website allows instructors to invite specific participants to complete the modules and track each participant’s progress. For participants, the online platform allows them to progress through the curriculum at their own pace and view educational materials at any time, promoting their learning and retention of digital skills.

To help staff become DOORs instructors, we have standardized a training pathway. First, staff complete the digital navigator training, a peer-reviewed curriculum that teaches staff how mobile devices can be integrated into care (42,43). Second, staff shadow and assist a digital navigator who is experienced in leading DOORs. We have piloted this training pathway with a group of college students and have received positive feedback from these students and DOORs participants. The digital navigator training ensures a scalable pathway to increase workforce capacity to teach DOORs.

Finally, we recognize that implementing a multi-week-long program does not suit all organizations’ needs and workflow. In response, we have designed a single-session intervention model of DOORs during which digital navigators engage participants in a group discussion about their smartphone use and knowledge of mental health apps and then teach participants how to choose a mental health app that aligns with their needs. Currently, digital navigators utilize the M-Health Index and Navigation Database (MIND) and its user-facing website (mindapps.org) (41). As reported, most patients with a mental illness have not downloaded a mental health app (44,45), and thus, may benefit from education around how to choose a mental health app that is appropriate for their needs. We have piloted this curriculum at multiple locations whose patient turnover rate is too quick to suit a multi-week program, including in an in-patient psychiatric unit and a temporary residential program for patients seeking mental health services (34).

Participant survey outcomes

Both groups that were included in the study completed all 8 weeks of DOORs programming. Across the 8 weeks, 105 pre-session surveys and 92 post-session surveys were collected, resulting in a final analytic dataset of 92 unique paired pre- and post-session surveys.

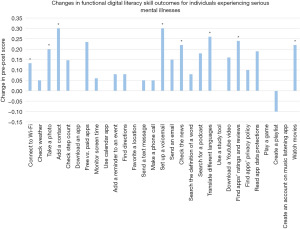

As shown below in Figure 2, statistically significant improvement (P<0.05) was seen in 8/29 of the digital skills assessed by the functional questions in the survey. No change was seen in 6/29 skills after participation, and one skill (creating a playlist) demonstrated a decline. The first DOORs session, which teaches core smartphone skills, had the most statistically significant improvement, with 3/4 of the digital skills taught in that session demonstrating significant changes.

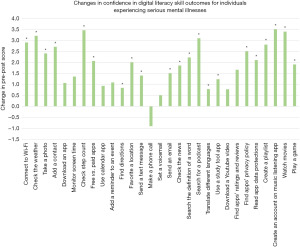

For confidence-based questions, statistically significant improvement (P<0.05) was seen in 21/29 of the digital skills assessed, as seen in Figure 3. One skill (making a phone call) demonstrated a decline in confidence. The first (teaching core smartphone skills), fifth (how to use one’s smartphone to keep informed), and last (enjoying downtime with the use of a smartphone) DOORs sessions showed the most significant improvement, as all digital skills taught in these sessions demonstrated significantly improved confidence.

Semi-structured interviews

Three themes were generated: awareness of divide, patient-centered design, and expanded skills and confidence. Themes and exemplary quotes are highlighted in Table 1.

Table 1

| Theme | Exemplary quote |
|---|---|
| Awareness of divide | “I probably wouldn’t have gotten a new cellphone. I would have kept my flip phone. Flip phones don’t do a lot do they? You can just call out and call in. I’ve had one since they came out and I never thought about getting another phone because I said to myself, I don’t know how to use a smartphone and now that I went through this program, I know how to use it.” |
| | “There were few of us who did not know much of anything just the breadth of it just getting an overview was interesting.” |
| Patient-centered design | “I would tell anybody who takes part in the group come in with an open mind because there is very receptive staff here that can work with you and work on your level so that you don’t get stressed out or anything so that you are picking up the stuff with ease.” |
| | “I think it was important that we have the handouts…that’s the only way that I can learn if there is a print out of something.” |
| Expanded skills and confidence | “I knew where to find things more. I know how to appropriately download an app. I feel safe with emailing and texting and talking on there.” |
| | “Now I feel really confident using the phone. I know there’s still some other things that I can learn but overall I feel like I did pick up a lot of stuff because when I first had the thing with the apps I had like maybe 3 or 4 apps now I have all the different apps that I like to use and a lot of them like I said all of my doctors at different parts of the hospital all their numbers are in there and now I don’t have to worry about having to have the nurse come in if I am having a crisis you know I could just press them and I’m talking directly to the hospital.” |

First, participants described limited smartphone use prior to DOORs, such as only texting and calling or checking the weather. Participants were aware that they lacked skills that seemed pervasive and necessary to contribute to modern society. Despite their limited use, participants were reliant on their devices, with some describing them as “their lifeline”, and thus were eager to learn more digital skills.

Participants reported that during their participation in DOORs, they enjoyed coming to the program as it introduced practical skills in a well-paced, hands-on way. They praised the instructors for being flexible and tailoring materials to their needs, especially the patient-facing handouts that some cited as crucial to their comprehension and independent replication of the skills taught. Some proposed a few improvements to the program, including adding modules on online banking and QR codes.

After DOORs, participants reported marked improvement in their facility with a smartphone and felt more confident. An array of expanded use cases of participants’ smartphones was reported including contacting health professionals, tracking federal benefits, watching YouTube videos, recognizing fraudulent messages and downloading an app safely. Participants said they were more likely to use their smartphone after the program and some were even inspired to buy a smartphone after gaining more confidence.

Discussion

Key findings and explanation of findings

As healthcare systems continue to expand digital technologies, there is a need for digital literacy programs like DOORs. By expanding the program’s accessibility, improving scales to measure its efficacy, and receiving participant feedback, DOORs is better positioned to reach more people while remaining scalable.

Updates to the curriculum, facilitator training, and the modality of the program reflect our efforts to make DOORs more accessible. The updated content around smartphone accessibility features reflects changes in technology, while the content around patient portals and telehealth reflects the changes in how care is being delivered in the COVID-19 era. By enrolling eligible participants into the Lifeline Program during the first session and then teaching participants how to use their new device in subsequent weeks, our curriculum improves both access to devices and comfort using them. Though Lifeline has its limitations, it offers a free smartphone and data plan to low-income citizens and benefit recipients, providing a gateway to learn basic digital literacy skills and future ownership of a more advanced device if needed. Yet, uptake of the program is still low and additional education is needed (46). Digital literacy programming can incite interest in Lifeline and other access programs, as some of our participants were only interested in smartphones after learning how to use one. By combining access efforts with a digital literacy program, DOORs can address multiple determinants of digital health equity (15).

Our survey and interview results suggest that the new curriculum of DOORs can improve the digital literacy level and confidence of participants. It is notable that for both functional and confidence questions, the first session, teaching core smartphone skills, demonstrated the most significant improvement. This result suggests that, prior to DOORs, participants lacked foundational digital literacy skills, which is also corroborated by other reports (12). Some results are more challenging to interpret, as participants demonstrated a decline in their confidence in making a phone call and in their ability to create a playlist. Though this result is multifaceted, an overestimation of initial ability could account for these reductions. After DOORs, participants felt significantly more confident in 72% of the digital skills assessed (21/29), and this increased confidence was also corroborated by participant interviews. These results suggest that DOORs can improve participants’ technical skills and can address a commonly reported barrier of mobile health adoption (11).

Strengths and limitations

Our study has several strengths. DOORs provides individual-level digital literacy support, a key intervention for promoting digital health equity (15). Our team has been delivering the program for the past 5 years and has established long-term relationships in the community clubhouses that we serve. We have leveraged these relationships to build trust among participants and staff and integrate their voices into the program, a cited marker of success for community engagement (15). For example, our patient-facing handouts were created due to their direct feedback, and as the interviews highlight, contributed to participants’ improvements.

As we continue to refine DOORs, we anticipate the following challenges. First, quantifying the impact of a digital literacy program is difficult. As previously described, there are few validated scales that measure participants’ facility with a mobile device, and none have been validated on the population that DOORs serves, those with a serious mental illness. Most validated scales are assessed among the general population (28,29), who tend to have higher baseline digital literacy, and these scales may not be appropriate for those with limited literacy. Though our own measurement tool has been iterated over years of working with those with serious mental illnesses, it is not a validated scale, which limits generalizability. In addition, the digital skills assessed in our surveys reflect the latest updates to technology and cover a broad array of skills that are specific to DOORs, while other scales are specific to health-related behaviors (28), outdated (27), or limited to only a few technical skills (29). Yet, we recognize that the specificity of our survey to the program might further limit its generalizability. Second, the patient outcomes reported in this paper are limited by the lack of a control group. Though we report these outcomes to assess patients’ acceptability of our measurement tool and inform the direction of the program, in the future, we will work to assess the outcomes of DOORs using more robust research methods with a control group.

Comparison with similar research

While research on digital literacy is increasing, there is no clear standardization of research methods, curriculum themes, or skills taught (47). To our knowledge, DOORs is the only smartphone-based program that focuses on essential digital skills with years of community experience and scientific research. Other digital literacy programs focus on digital health literacy to help patients access their patient portal or telehealth, but do not cover basic smartphone skills (48,49). Other community programs teach basic digital literacy, but are laptop-based (50). While other digital literacy programs and DOORs differ in modalities and curricula, participants demonstrate similar gaps in basic technology literacy (12). There is a continued need for evidence-based digital literacy programs that can serve a broad population and teach essential digital skills for daily life.

Implications and actions needed

We know the current modules and updates to DOORs will not fit every population, but we hope other teams can use the program as a starting point or model for their own digital literacy efforts. Digital literacy programming should be coupled with access efforts to maximize impact. We applaud efforts to expand the library of modules included in DOORs, as new teams should create local and custom content specific to their populations. With this library of content, digital navigators could pick the series of modules most important for their population and deliver a unique DOORs course without having to develop new infrastructure. This streamlined customization can be especially useful when serving marginalized communities who, despite facing the deepest inequities, are often not included in digital health development (18). Community feedback should be integrated into any digital literacy program, as the most praised part of DOORs, the patient-facing handouts, was created due to direct participant feedback. This highlights the value of building trust and fostering collaboration in the community. As the digital divide pervades a range of populations and geographic locations (51), DOORs is a ready-to-implement opportunity that can promote digital inclusion or inform similar efforts.

Conclusions

DOORs is a peer-reviewed digital literacy program that can help mitigate the second digital divide. The updated curriculum and new assessment scales reflect necessary adaptations that make the program more accessible and its impact easier to assess on an individual level. As the healthcare system continues to increase its reliance on mobile devices, programs like DOORs are well positioned to promote access and equity around digital health resources.

Acknowledgments

We thank all participants and community partners for participating in DOORs. We thank all clinicians, participants, and the National Digital Inclusion Alliance community for their invaluable feedback on the DOORs program.

Funding: This work was supported by the Sydney Baer Jr. Foundation.

Footnote

Data Sharing Statement: Available at https://mhealth.amegroups.com/article/view/10.21037/mhealth-23-13/dss

Peer Review File: Available at https://mhealth.amegroups.com/article/view/10.21037/mhealth-23-13/prf

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://mhealth.amegroups.com/article/view/10.21037/mhealth-23-13/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). This study was reviewed by the Beth Israel Deaconess Medical Center IRB (protocol No. 2022P000923). Per our IRB approval, verbal informed consent was obtained from all participants as this was a low-risk study that did not collect personal health information. All methods were carried out in accordance with relevant guidelines and regulations.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Smith AC, Thomas E, Snoswell CL, et al. Telehealth for global emergencies: Implications for coronavirus disease 2019 (COVID-19). J Telemed Telecare 2020;26:309-13. [Crossref] [PubMed]

- Torous J, Jän Myrick K, Rauseo-Ricupero N, et al. Digital Mental Health and COVID-19: Using Technology Today to Accelerate the Curve on Access and Quality Tomorrow. JMIR Ment Health 2020;7:e18848. [Crossref] [PubMed]

- Rosanwo D. Telehealth Holds Steady As Americans Warm to In-Person Medical Visits. The Harris Poll 2021. Available online: https://theharrispoll.com/briefs/time-mental-telehealth-june-2021/

- Nouri S, Khoong EC, Lyles CR, et al. Addressing Equity in Telemedicine for Chronic Disease Management During the Covid-19 Pandemic. Catalyst non-issue content 2020;1. doi: 10.1056/CAT.20.0123.

- Deady M, Glozier N, Calvo R, et al. Preventing depression using a smartphone app: a randomized controlled trial. Psychol Med 2022;52:457-66. [Crossref] [PubMed]

- Nwosu A, Boardman S, Husain MM, et al. Digital therapeutics for mental health: Is attrition the Achilles heel? Front Psychiatry 2022;13:900615. [Crossref] [PubMed]

- Swisher K. Sway. New York Times 2021. Available online: https://www.nytimes.com/2021/07/22/opinion/sway-kara-swisher-oren-frank.html?showTranscript=1

- Dinkel D, Harsh Caspari J, Fok L, et al. A qualitative exploration of the feasibility of incorporating depression apps into integrated primary care clinics. Transl Behav Med 2021;11:1708-16. [Crossref] [PubMed]

- Steare T, Giorgalli M, Free K, et al. A qualitative study of stakeholder views on the use of a digital app for supported self-management in early intervention services for psychosis. BMC Psychiatry 2021;21:311. [Crossref] [PubMed]

- Sükei E, Norbury A, Perez-Rodriguez MM, et al. Predicting Emotional States Using Behavioral Markers Derived From Passively Sensed Data: Data-Driven Machine Learning Approach. JMIR Mhealth Uhealth 2021;9:e24465. [Crossref] [PubMed]

- Jacob C, Sezgin E, Sanchez-Vazquez A, et al. Sociotechnical Factors Affecting Patients' Adoption of Mobile Health Tools: Systematic Literature Review and Narrative Synthesis. JMIR Mhealth Uhealth 2022;10:e36284. [Crossref] [PubMed]

- Spanakis P, Wadman R, Walker L, et al. Measuring the digital divide among people with severe mental ill health using the essential digital skills framework. Perspect Public Health 2022; Epub ahead of print. [Crossref] [PubMed]

- Robotham D, Satkunanathan S, Doughty L, et al. Do We Still Have a Digital Divide in Mental Health? A Five-Year Survey Follow-up. J Med Internet Res 2016;18:e309. [Crossref] [PubMed]

- Khan A, Shrivastava R, Tugnawat D, et al. Design and Development of a Digital Program for Training Non-Specialist Health Workers to Deliver an Evidence-Based Psychological Treatment for Depression in Primary Care in India. J Technol Behav Sci 2020;5:402-15. [Crossref] [PubMed]

- Lyles CR, Nguyen OK, Khoong EC, et al. Multilevel Determinants of Digital Health Equity: A Literature Synthesis to Advance the Field. Annu Rev Public Health 2023;44:383-405. [Crossref] [PubMed]

- Noori S, Jordan A, Bromage W, et al. Navigating the digital divide: providing services to people with serious mental illness in a community setting during COVID-19. SN Soc Sci 2022;2:160. [Crossref] [PubMed]

- Perrin A. Mobile Technology and Home Broadband 2021. Pew Research Center 2021. Available online: https://www.pewresearch.org/internet/2021/06/03/mobile-technology-and-home-broadband-2021/

- Friis-Healy EA, Nagy GA, Kollins SH. It Is Time to REACT: Opportunities for Digital Mental Health Apps to Reduce Mental Health Disparities in Racially and Ethnically Minoritized Groups. JMIR Ment Health 2021;8:e25456. [Crossref] [PubMed]

- Sieck CJ, Sheon A, Ancker JS, et al. Digital inclusion as a social determinant of health. NPJ Digit Med 2021;4:52. [Crossref] [PubMed]

- Lyles CR, Wachter RM, Sarkar U. Focusing on Digital Health Equity. JAMA 2021;326:1795-6. [Crossref] [PubMed]

- Equity in Telehealth: Taking Key Steps Forward. American Medical Association; 2022.

- Pichan CM, Anderson CE, Min LC, et al. Geriatric Education on Telehealth (GET) Access: A medical student volunteer program to increase access to geriatric telehealth services at the onset of COVID-19. J Telemed Telecare 2021; Epub ahead of print. [Crossref] [PubMed]

- Triana AJ, Gusdorf RE, Shah KP, et al. Technology Literacy as a Barrier to Telehealth During COVID-19. Telemed J E Health 2020;26:1118-9. [Crossref] [PubMed]

- Norman CD, Skinner HA. eHEALS: The eHealth Literacy Scale. J Med Internet Res 2006;8:e27. [Crossref] [PubMed]

- Karnoe A, Furstrand D, Christensen KB, et al. Assessing Competencies Needed to Engage With Digital Health Services: Development of the eHealth Literacy Assessment Toolkit. J Med Internet Res 2018;20:e178. [Crossref] [PubMed]

- Sengpiel M, Jochems N. Validation of the Computer Literacy Scale (CLS). In: Zhou J, Salvendy G. editors. Human Aspects of IT for the Aged Population. Design for Aging. ITAP 2015. Cham: Springer; 2015:365-75.

- Lee J, Lee EH, Chae D. eHealth Literacy Instruments: Systematic Review of Measurement Properties. J Med Internet Res 2021;23:e30644. [Crossref] [PubMed]

- Nelson LA, Pennings JS, Sommer EC, et al. A 3-Item Measure of Digital Health Care Literacy: Development and Validation Study. JMIR Form Res 2022;6:e36043. [Crossref] [PubMed]

- van der Vaart R, Drossaert C. Development of the Digital Health Literacy Instrument: Measuring a Broad Spectrum of Health 1.0 and Health 2.0 Skills. J Med Internet Res 2017;19:e27. [Crossref] [PubMed]

- Hoffman L, Wisniewski H, Hays R, et al. Digital Opportunities for Outcomes in Recovery Services (DOORS): A Pragmatic Hands-On Group Approach Toward Increasing Digital Health and Smartphone Competencies, Autonomy, Relatedness, and Alliance for Those With Serious Mental Illness. J Psychiatr Pract 2020;26:80-8. [Crossref] [PubMed]

- Rodriguez-Villa E, Camacho E, Torous J. Psychiatric rehabilitation through teaching smartphone skills to improve functional outcomes in serious mental illness. Internet Interv 2021;23:100366. [Crossref] [PubMed]

- Franco OH, Calkins ME, Giorgi S, et al. Feasibility of Mobile Health and Social Media-Based Interventions for Young Adults With Early Psychosis and Clinical Risk for Psychosis: Survey Study. JMIR Form Res 2022;6:e30230. [Crossref] [PubMed]

- Firth J, Cotter J, Torous J, et al. Mobile Phone Ownership and Endorsement of "mHealth" Among People With Psychosis: A Meta-analysis of Cross-sectional Studies. Schizophr Bull 2016;42:448-55. [Crossref] [PubMed]

- Camacho E, Torous J. Impact of Digital Literacy Training on Outcomes for People With Serious Mental Illness in Community and Inpatient Settings. Psychiatr Serv 2023;74:534-8. [Crossref] [PubMed]

- National Digital Inclusion Alliance. Join The Community. Available online: https://www.digitalinclusion.org/join/

- Patterson TL, Goldman S, McKibbin CL, et al. UCSD Performance-Based Skills Assessment: development of a new measure of everyday functioning for severely mentally ill adults. Schizophr Bull 2001;27:235-45. [Crossref] [PubMed]

- Mausbach BT, Harvey PD, Goldman SR, et al. Development of a brief scale of everyday functioning in persons with serious mental illness. Schizophr Bull 2007;33:1364-72. [Crossref] [PubMed]

- Braun V, Clarke V. Using thematic analysis in psychology. Qualitative Research in Psychology 2006;3:77-101. [Crossref]

- Assurance Wireless. What is Lifeline Service? Available online: https://www.assurancewireless.com/lifeline-services/what-lifeline

- Henson P, David G, Albright K, et al. Deriving a practical framework for the evaluation of health apps. Lancet Digit Health 2019;1:e52-4. [Crossref] [PubMed]

- Lagan S, Sandler L, Torous J. Evaluating evaluation frameworks: a scoping review of frameworks for assessing health apps. BMJ Open 2021;11:e047001. [Crossref] [PubMed]

- Wisniewski H, Torous J. Digital navigators to implement smartphone and digital tools in care. Acta Psychiatr Scand 2020;141:350-5. [Crossref] [PubMed]

- Wisniewski H, Gorrindo T, Rauseo-Ricupero N, et al. The Role of Digital Navigators in Promoting Clinical Care and Technology Integration into Practice. Digit Biomark 2020;4:119-35. [Crossref] [PubMed]

- Beard C, Silverman AL, Forgeard M, et al. Smartphone, Social Media, and Mental Health App Use in an Acute Transdiagnostic Psychiatric Sample. JMIR Mhealth Uhealth 2019;7:e13364. [Crossref] [PubMed]

- Torous J, Wisniewski H, Liu G, et al. Mental Health Mobile Phone App Usage, Concerns, and Benefits Among Psychiatric Outpatients: Comparative Survey Study. JMIR Ment Health 2018;5:e11715. [Crossref] [PubMed]

- Universal Service Administrative Company. Program Data. Available online: https://www.usac.org/lifeline/resources/program-data/

- Tinmaz H, Lee YT, Fanea-Ivanovici M, et al. A systematic review on digital literacy. Smart Learn Environ 2022;9:21. [Crossref]

- Rodríguez Parrado IY, Achury Saldaña DM. Digital Health Literacy in Patients With Heart Failure in Times of Pandemic. Comput Inform Nurs 2022;40:754-62. [Crossref] [PubMed]

- Lyles CR, Tieu L, Sarkar U, et al. A Randomized Trial to Train Vulnerable Primary Care Patients to Use a Patient Portal. J Am Board Fam Med 2019;32:248-58. [Crossref] [PubMed]

- Tech Goes Home. Our Mission. Available online: https://www.techgoeshome.org/our-mission

- Gallardo R. The State of the Digital Divide in the United States. 2022. Available online: https://pcrd.purdue.edu/the-state-of-the-digital-divide-in-the-united-states/

Cite this article as: Alon N, Perret S, Torous J. Working towards a ready to implement digital literacy program. mHealth 2023;9:32.